As businesses increasingly move their operations to the cloud, it has become more important than ever to ensure the security and performance of cloud-based networks. Cloud environments like Amazon Web Services (AWS) offer a wide range of tools and services that can help users manage their networks, but it can be difficult to gain visibility into network traffic and activity. One of the key tools for monitoring and optimizing network performance on AWS is VPC Flow Logs.

In this blog, we’ll explore what VPC Flow Logs is, how it works, and why AWS users might need it. We’ll also provide a short tutorial on how to enable it.

Gain invaluable insights

VPC Flow Logs is a logging feature that captures detailed information about the IP traffic flowing in and out of the network interfaces in your Virtual Private Cloud (VPC) on AWS. By enabling VPC Flow Logs, you can gain valuable insights into the types of traffic entering and leaving your VPC, as well as metrics such as the amount of data transferred.

This useful feature can provide a wealth of information, including source and destination IP addresses, ports, protocol, traffic direction (ingress or egress), and more. Analyzing this information can be highly beneficial for a range of purposes such as identifying and resolving network issues, improving network performance, and monitoring network traffic for security-related concerns.

Debug and analyze network issues

By using VPC Flow Logs, you can also gain deeper insights into high traffic rates within a specific VPC or even at the subnet level. These insights can be invaluable for debugging and analyzing network issues, as well as identifying the reasons behind high costs.

For example, if a client is concerned about the high cost of data processed by their NAT Gateway, you can enable VPC Flow Logs on the subnet associated with the NAT Gateway. The resulting records include source and destination IP addresses, ports, and packet counts, which you can then query and sort by the number of packets transferred. With this information, you can identify what is driving the NAT Gateway usage and determine whether it is justified or whether additional actions are required to reduce costs.
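To make this concrete, here is a minimal Python sketch of that analysis done locally. It assumes the flow records have already been parsed into (srcaddr, dstaddr, dstport, packets) tuples; the addresses and counts are made-up sample values, and top_talkers is a hypothetical helper, not part of any AWS tool.

```python
from collections import Counter

# Hypothetical, already-parsed flow records: (srcaddr, dstaddr, dstport, packets)
records = [
    ("10.0.1.45", "203.0.113.10", 443, 1200),
    ("10.0.1.45", "203.0.113.10", 443, 800),
    ("10.0.2.17", "198.51.100.7", 80, 150),
    ("10.0.3.9", "203.0.113.10", 443, 40),
]


def top_talkers(records, n=3):
    """Sum packets per (srcaddr, dstaddr, dstport) and return the n largest totals."""
    totals = Counter()
    for src, dst, port, packets in records:
        totals[(src, dst, port)] += packets
    # most_common() returns (key, total) pairs sorted from highest to lowest total
    return totals.most_common(n)


for (src, dst, port), packets in top_talkers(records):
    print(f"{src} -> {dst}:{port}  {packets} packets")
```

A source and destination pair that dominates the totals is a good first candidate to investigate.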

The greatest benefit: Analyze your logs with ease

One of the main benefits of VPC Flow Logs is that they can be analyzed with various AWS services, for example CloudWatch Logs Insights, which lets you filter and aggregate log data instead of reading through raw text. This works well because, although VPC Flow Logs can capture a large amount of data about network traffic, each record follows a structured format that can be easily analyzed using tools and scripts.

For example, instead of manually reading through text-based logs to spot patterns and anomalies in network traffic, you can query VPC Flow Logs to quickly identify issues such as security threats or performance problems. By analyzing the flow logs, you can gain insights into how your applications and services use network resources and identify areas where you can optimize network configurations.

Get Familiar with Flow Logs Costs

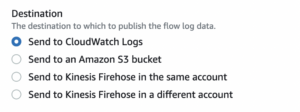

VPC Flow Logs charges for delivering logs to a destination, based on the amount of data, in gigabytes (GB), sent to the chosen destination. The price varies depending on the destination type and the region. The available destinations are S3 buckets, CloudWatch Logs log groups, and Amazon Kinesis Data Firehose (now called Amazon Data Firehose).

For a detailed breakdown of these costs, refer to the Amazon CloudWatch pricing page: under the ‘Paid tier’, open the ‘Logs’ tab and find the ‘Vended Logs’ section. There you will find more in-depth explanations of the different charges and guidance on how to calculate the total cost for your specific usage.
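Because the charge scales with data volume, a back-of-the-envelope estimate is simply volume times price. The sketch below assumes a steady record rate, an average record size, a 30-day month, and a single flat per-GB price. All input values are hypothetical placeholders, not real AWS prices (actual vended-log pricing is tiered and varies by region), and estimate_monthly_cost is a hypothetical helper.

```python
def estimate_monthly_cost(records_per_second, bytes_per_record, price_per_gb):
    """Rough monthly estimate: delivered data volume (GB) x a flat per-GB price.

    Assumptions: a constant record rate, a 30-day month, 1 GB = 10**9 bytes,
    and a single flat price (real pricing is tiered and region-specific).
    """
    seconds_per_month = 30 * 24 * 60 * 60
    total_bytes = records_per_second * bytes_per_record * seconds_per_month
    gigabytes = total_bytes / 10**9
    return gigabytes, gigabytes * price_per_gb


# Hypothetical inputs: 100 records/s, ~150 bytes each, a placeholder $0.50/GB
gb, cost = estimate_monthly_cost(100, 150, 0.50)
print(f"~{gb:.2f} GB/month, ~${cost:.2f}/month")
```

Swapping in your own measured record rate and the current price from the pricing page gives a first-order figure to compare against your bill.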

A short guide to enabling Flow Logs

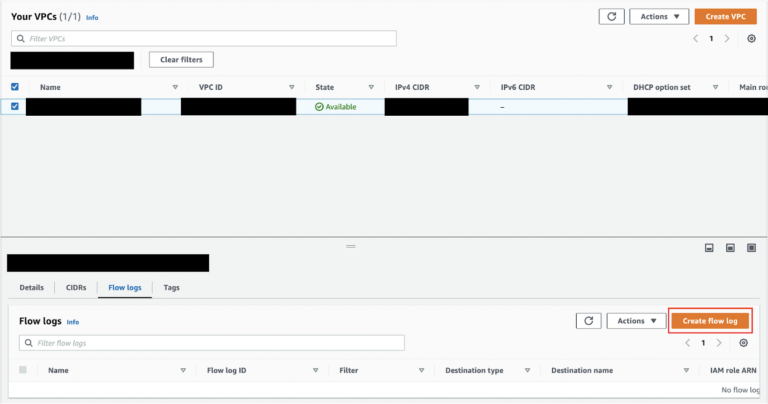

Flow Logs can be enabled for an entire VPC or at the subnet level.

- Open the AWS Management Console and go to the VPC console.

- Find the desired VPC or subnet, select the checkbox next to it, and open the Flow logs tab. Click ‘Create flow log’.

- On the ‘Create flow log’ page, do the following:

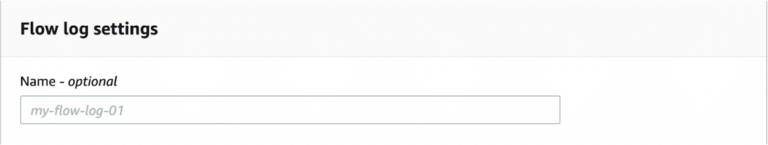

- Give the flow log a name.

- Since we want to focus on the CloudWatch destination, make sure the ‘Send to CloudWatch Logs’ option is selected.

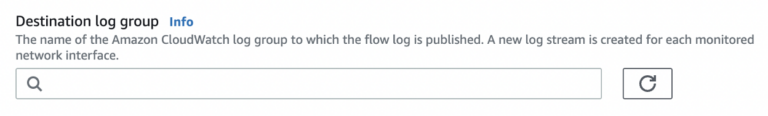

- Create a log group and select it (see How to create a log group).

- Create an IAM role for log streaming and select it (see How to create an IAM role for CloudWatch destination streaming).

- Choose either ‘AWS default format’ for your log records or a ‘Custom format’ (see How to choose the log record format).

NOTE: Once the flow log is created, its log record format, IAM role, and destination log group cannot be changed. To change any of these parameters, you need to delete the flow log and create a new one with the correct configuration.

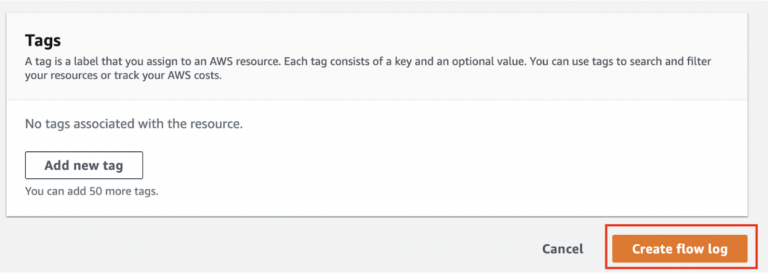

- Click on ‘Create flow log’.

- After creating a flow log, the page should look like this:

How to create a log group

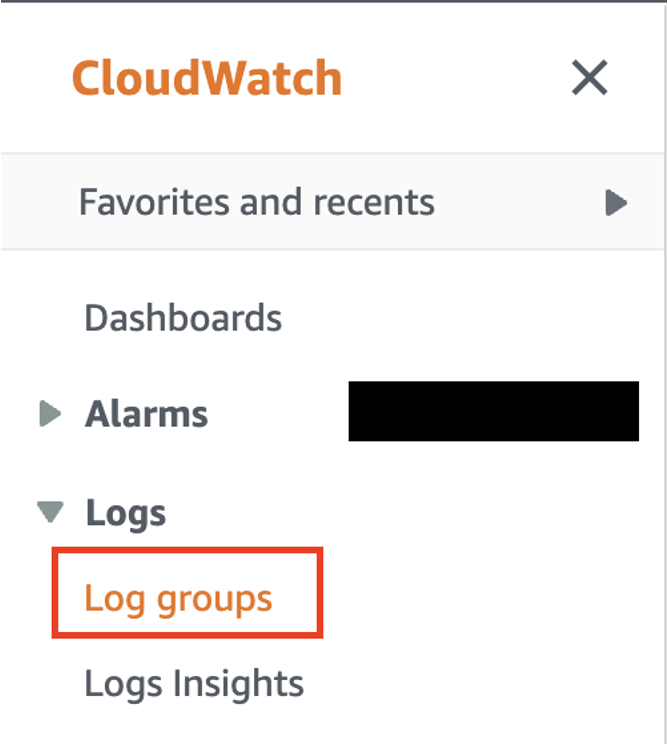

- Go to CloudWatch and open the menu on the left side. Choose ‘Log groups’.

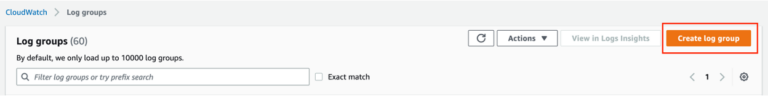

- Click on ‘Create log group’.

- On the ‘Create log group’ page, give a name to the new log group and click ‘Create’.

How to create an IAM role for CloudWatch destination streaming

- Go to the IAM console and create a new role. Choose a name that reflects its purpose (streaming flow logs to CloudWatch), e.g. VPCFlowLogStreamToCloudWatch. Follow this AWS documentation: https://docs.aws.amazon.com/vpc/latest/userguide/flow-logs-cwl.html.

- For the trusted entity, choose ‘Custom trust policy’, then copy the trust policy from the official documentation and paste it:

=== START POLICY ===

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "vpc-flow-logs.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}

=== END POLICY ===

- After clicking ‘Next’, you’ll be directed to the permissions page, where you can choose ‘Create policy’ to start creating a new policy. Again, name it in a way that corresponds to the role name. Choose the ‘JSON editor’ for the permissions, copy the permissions policy from the official documentation, and paste it. Once the policy is created, attach it to the role and finish creating the role.

=== START POLICY ===

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents",
        "logs:DescribeLogGroups",
        "logs:DescribeLogStreams"
      ],
      "Resource": "*"
    }
  ]
}

=== END POLICY ===
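Copying JSON from a web page can silently swap straight quotes for curly ones, which makes a policy invalid. As a minimal sketch, assuming you prefer to generate the two policies rather than paste them, Python's json module produces valid documents every time, and a small hypothetical checker (missing_actions) confirms the permissions policy lists every required action. The resulting text could be saved to a file and passed to the AWS CLI, for example via the --assume-role-policy-document file://... option of aws iam create-role.

```python
import json

# Trust policy: lets the VPC Flow Logs service assume the role
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "vpc-flow-logs.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# Actions the role needs to publish flow logs to CloudWatch Logs
REQUIRED_ACTIONS = [
    "logs:CreateLogGroup",
    "logs:CreateLogStream",
    "logs:PutLogEvents",
    "logs:DescribeLogGroups",
    "logs:DescribeLogStreams",
]

PERMISSIONS_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": REQUIRED_ACTIONS, "Resource": "*"}],
}


def missing_actions(policy_text, required=REQUIRED_ACTIONS):
    """Return required actions that no Allow statement lists explicitly.

    A simple sanity check only: wildcards such as logs:*, Deny statements,
    resources, and conditions are not evaluated.
    """
    policy = json.loads(policy_text)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be an object
        statements = [statements]
    granted = set()
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        if isinstance(actions, str):  # "Action" may be a string or a list
            actions = [actions]
        granted.update(actions)
    return set(required) - granted


# json.dumps always emits straight double quotes, so both texts are valid JSON
trust_json = json.dumps(TRUST_POLICY, indent=2)
permissions_json = json.dumps(PERMISSIONS_POLICY, indent=2)
print(trust_json)
print(permissions_json)
print(missing_actions(permissions_json))  # set()
```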

How to choose the log record format

You can choose the default log record format for the flow log. This gives you basic information, such as source and destination addresses and ports, the protocol, and packet and byte counts:

=== START CODE ===

${version} ${account-id} ${interface-id} ${srcaddr} ${dstaddr} ${srcport} ${dstport} ${protocol} ${packets} ${bytes} ${start} ${end} ${action} ${log-status}

=== END CODE ===
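Each default-format record is a single line of space-separated values in exactly this field order. As a minimal sketch, a record can be mapped back to field names by splitting on spaces; the sample record is made up (123456789012 is a placeholder account ID), and field_names and parse_record are hypothetical helpers.

```python
DEFAULT_FORMAT = (
    "${version} ${account-id} ${interface-id} ${srcaddr} ${dstaddr} "
    "${srcport} ${dstport} ${protocol} ${packets} ${bytes} "
    "${start} ${end} ${action} ${log-status}"
)


def field_names(log_format):
    """Turn '${srcaddr} ${dstaddr}' into ['srcaddr', 'dstaddr']."""
    return [token[2:-1] for token in log_format.split()]


def parse_record(record, log_format=DEFAULT_FORMAT):
    """Pair each space-separated value with its field name, by position."""
    names = field_names(log_format)
    values = record.split()
    if len(values) != len(names):
        raise ValueError(f"expected {len(names)} fields, got {len(values)}")
    return dict(zip(names, values))


sample = ("2 123456789012 eni-0abc1234def567890 10.0.1.45 203.0.113.10 "
          "64536 80 6 10 840 1700000000 1700000060 ACCEPT OK")
record = parse_record(sample)
print(record["srcaddr"], "->", record["dstaddr"], record["dstport"], record["action"])
```

All values come back as strings; protocol 6 is TCP in the IANA protocol numbering.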

Alternatively, choosing a customized log record can give you additional information such as flow direction (ingress or egress traffic), traffic path, and more. To read more about the options, follow this link: Available fields for Flow Log records formatting.

Once the flow log is created with the default or custom fields, it is important to know the order of those fields in the log record format, because later queries depend on it. To see this information, run the following command in the AWS CLI:

=== START CODE ===

aws ec2 describe-flow-logs --flow-log-ids "<flow log id>"

=== END CODE ===

The output should look like this.

=== START OUTPUT ===

{
  "FlowLogs": [
    {
      "CreationTime": "<flow log creation timestamp>",
      "DeliverLogsPermissionArn": "arn:aws:iam::<account_id>:role/<flow log streaming role>",
      "DeliverLogsStatus": "SUCCESS",
      "FlowLogId": "flow-log-id",
      "FlowLogStatus": "ACTIVE",
      "LogGroupName": "<destination log group streamed to>",
      "ResourceId": "<VPC ID>",
      "TrafficType": "ALL",
      "LogDestinationType": "cloud-watch-logs",
      "LogFormat": "${account-id} ${action} ${bytes} ${dstaddr} ${dstport} ${end} ${flow-direction} ${instance-id} ${interface-id} ${log-status} ${packets} ${pkt-dst-aws-service} ${pkt-dstaddr} ${pkt-src-aws-service} ${pkt-srcaddr} ${protocol} ${srcaddr} ${srcport} ${start} ${traffic-path}",
      "Tags": [
        {
          "Key": "Name",
          "Value": "<flow log name>"
        }
      ],
      "MaxAggregationInterval": 600
    }
  ]
}

=== END OUTPUT ===
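Because a Logs Insights parse pattern needs one ‘*’ per field in exactly this order, it can be safer to generate that line than to type it. This minimal Python sketch assumes you have the describe-flow-logs output as JSON text; it reads LogFormat, turns each ${field-name} into an underscore alias (the naming used in the queries later in this post), and builds the pattern. insights_parse_line is a hypothetical helper, and the sample JSON is a trimmed, made-up version of the output above.

```python
import json


def insights_parse_line(describe_output, index=0):
    """Build a Logs Insights parse line whose '*' count matches LogFormat."""
    log_format = json.loads(describe_output)["FlowLogs"][index]["LogFormat"]
    # '${flow-direction}' -> 'flow_direction'
    aliases = [token[2:-1].replace("-", "_") for token in log_format.split()]
    pattern = " ".join("*" for _ in aliases)
    return f'parse @message "{pattern}" as {", ".join(aliases)}'


sample_output = json.dumps({
    "FlowLogs": [{"FlowLogId": "fl-example", "LogFormat": "${srcaddr} ${srcport} ${dstaddr} ${dstport}"}]
})
print(insights_parse_line(sample_output))
# parse @message "* * * *" as srcaddr, srcport, dstaddr, dstport
```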

CloudWatch log group querying

- Go to CloudWatch and choose ‘Log groups’ in the menu. Select the checkbox next to the destination log group you created for the flow logs, and click ‘View in Logs Insights’.

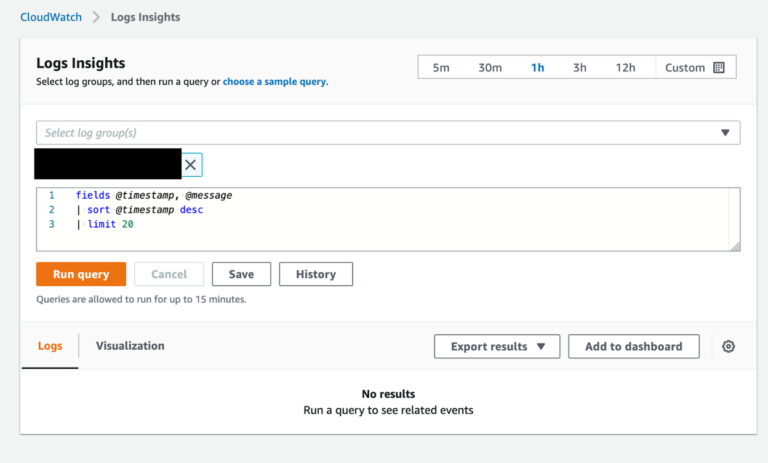

- Upon entering Logs Insights, you will see a page like this one:

Query syntax for flow logs in CloudWatch Insights

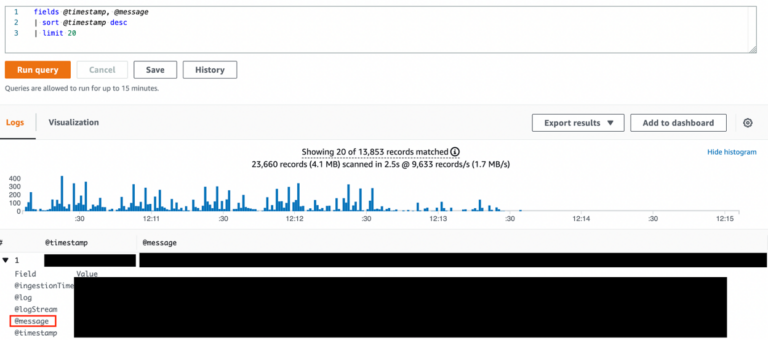

CloudWatch Logs Insights has its own query syntax. Its query language, also called InsightsQL, is similar to SQL. For flow logs, we need to understand how the flow log record is structured before we start building a query for it.

Log group record structure as seen in CloudWatch Logs.

The @message field contains the flow log record and is the field we need to analyze. Its contents follow the log record format, in the same field order, that we saw in the describe-flow-logs output from the AWS CLI.

The logic of the query is to break the message body into distinct segments, alias each segment, filter according to our needs, and build a more organized output table that is easier to understand.

We will use this example query and walk through it line by line:

=== START CODE ===

parse @message "* * * * * * * * * * * * * * * * * * * *" as account_id, action, bytes, dstaddr, dstport, end, flow_direction, instance_id, interface_id, log_status, packets, pkt_dst_aws_service, pkt_dstaddr, pkt_src_aws_service, pkt_srcaddr, protocol, srcaddr, srcport, start, traffic_path
| stats sum(packets) as packetsTransfered by flow_direction, srcaddr, dstaddr, protocol, action
| sort packetsTransfered desc
| limit 5

=== END CODE ===

- Parse @message.

=== START CODE ===

parse @message "* * * * * * * * * * * * * * * * * * * *" as account_id, action, bytes, dstaddr, dstport, end, flow_direction, instance_id, interface_id, log_status, packets, pkt_dst_aws_service, pkt_dstaddr, pkt_src_aws_service, pkt_srcaddr, protocol, srcaddr, srcport, start, traffic_path

=== END CODE ===

The parse @message line breaks the message body into smaller segments. Each ‘*’ captures one segment of the message body, so it is VERY IMPORTANT that the number of ‘*’s matches the number of aliases that follow.

For example, suppose a flow log uses only these fields, in this order: srcaddr, srcport, dstaddr, dstport. Its @message would then look like this: 10.0.1.45 64536 142.45.56.10 80

The matching parse query is:

=== START CODE ===

parse @message "* * * *" as srcaddr, srcport, dstaddr, dstport

=== END CODE ===

NOTE:

Remember, the aliases HAVE to follow the same order as the fields in the log record format, because each ‘*’ captures one segment of the message body, in sequence.
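A rough local stand-in for parse shows why the order matters: values are assigned to aliases purely by position, so a swapped alias list still "works" but silently labels the wrong values. glob_parse is a hypothetical helper that splits on single spaces; it approximates the idea and is not how Logs Insights implements parse.

```python
def glob_parse(message, aliases):
    """Approximate parse @message "* * ..." by splitting on spaces and pairing by position."""
    segments = message.split(" ")
    if len(segments) != len(aliases):
        raise ValueError(f"{len(segments)} segments but {len(aliases)} aliases")
    return dict(zip(aliases, segments))


message = "10.0.1.45 64536 142.45.56.10 80"

correct = glob_parse(message, ["srcaddr", "srcport", "dstaddr", "dstport"])
swapped = glob_parse(message, ["srcaddr", "dstaddr", "srcport", "dstport"])

print(correct["dstaddr"])  # 142.45.56.10
print(swapped["dstaddr"])  # 64536 (a port number mislabeled as an address)
```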

- Stats, as, by line.

=== START CODE ===

| stats sum(packets) as packetsTransfered by flow_direction, srcaddr, dstaddr, protocol, action

=== END CODE ===

The stats line defines the aggregated table we want to see as the output of our query, containing the information relevant to the situation.

For example, in the query above, stats sum(packets) adds up the packet counts, as packetsTransfered names the resulting column, and by flow_direction, srcaddr, dstaddr, protocol, action groups the sums by those parsed fields, so each output row represents one combination of their values.

NOTE: Stats can only be built on fields we extracted in the parse line. This means that if we did not parse critical information, such as an alias for dstaddr, we cannot build stats by dstaddr.

Example

=== START CODE ===

parse @message "* * *" as srcaddr, dstaddr, packets
| stats sum(packets) as packetSum by srcaddr, dstaddr

=== END CODE ===
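The grouping that stats ... by performs can be sketched in Python, assuming each parsed record is a dictionary of string values. stats_sum is a hypothetical helper; grouping by a field that was never parsed raises an error, which mirrors the NOTE above.

```python
from collections import defaultdict


def stats_sum(rows, value_field, by_fields):
    """Approximate 'stats sum(value_field) by by_fields' over parsed rows."""
    totals = defaultdict(int)
    for row in rows:
        # Raises KeyError if a by-field was never parsed into the row
        key = tuple(row[field] for field in by_fields)
        totals[key] += int(row[value_field])  # values are strings, so convert
    return dict(totals)


rows = [
    {"srcaddr": "10.0.1.45", "dstaddr": "203.0.113.10", "packets": "10"},
    {"srcaddr": "10.0.1.45", "dstaddr": "203.0.113.10", "packets": "5"},
    {"srcaddr": "10.0.2.17", "dstaddr": "203.0.113.10", "packets": "7"},
]

print(stats_sum(rows, "packets", ["srcaddr", "dstaddr"]))
```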

- Sort line.

=== START CODE ===

| sort packetsTransfered desc

=== END CODE ===

This line sorts the output by the packetsTransfered column, in descending (desc) or ascending (asc) order.

Example

If we have a stat of counts, sum, or any other numeric metric we created in the output, we can sort it into descending or ascending order.
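In Python terms, sorting stats rows is a sorted() call, with reverse=True playing the role of desc. This minimal sketch assumes the rows are dictionaries and converts the sort key to a number, because numeric strings compare alphabetically (as strings, "9" sorts after "120"). sort_rows is a hypothetical helper and the rows are made up.

```python
def sort_rows(rows, field, descending=True):
    """Sort rows by a numeric field: descending (desc) or ascending (asc)."""
    return sorted(rows, key=lambda row: float(row[field]), reverse=descending)


rows = [
    {"srcaddr": "10.0.1.45", "packetsTransfered": "9"},
    {"srcaddr": "10.0.2.17", "packetsTransfered": "120"},
    {"srcaddr": "10.0.3.9", "packetsTransfered": "42"},
]

desc = [row["packetsTransfered"] for row in sort_rows(rows, "packetsTransfered")]
asc = [row["packetsTransfered"] for row in sort_rows(rows, "packetsTransfered", descending=False)]
print(desc)  # ['120', '42', '9']
print(asc)   # ['9', '42', '120']
```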

- Limit line.

=== START CODE ===

| limit 5

=== END CODE ===

This line caps the number of rows we see in the output table.

For example, if we write ‘| limit 5’, we will see only 5 rows in the output. If we write ‘| limit 50’, we will see up to 50 rows: when the full result has only 20 rows, we see those 20 rows even though the limit is 50.
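The behavior described here matches plain list slicing, as this tiny Python sketch shows with a hypothetical 20-row result: asking for more rows than exist simply returns all of them. limit is a hypothetical helper.

```python
def limit(rows, n):
    """Return at most n rows; fewer if the result is smaller than n."""
    return rows[:n]


result = [{"row": i} for i in range(20)]  # a hypothetical 20-row stats output

print(len(limit(result, 5)))   # 5
print(len(limit(result, 50)))  # 20
```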

- Filter line.

=== START CODE ===

# Not shown in the example query, but still relevant

| filter flow_direction like 'ingress'

=== END CODE ===

The filter command keeps only the records whose field values are relevant to us. So, if we only want to see the ingress traffic going into the VPC, we add a filter command so that the stats output includes only records whose flow_direction has the value ‘ingress’. A filter is usually placed after the parse line and before stats, so that only the matching records are aggregated.
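Here is a minimal Python sketch of that filter step, applied before aggregation. It keeps only rows whose flow_direction contains "ingress", a rough stand-in for like, which with a plain string matches on substrings. The rows and the filter_like helper are hypothetical.

```python
def filter_like(rows, field, substring):
    """Keep rows whose field value contains substring."""
    return [row for row in rows if substring in row.get(field, "")]


rows = [
    {"flow_direction": "ingress", "srcaddr": "203.0.113.10", "packets": "300"},
    {"flow_direction": "egress", "srcaddr": "10.0.1.45", "packets": "150"},
    {"flow_direction": "ingress", "srcaddr": "198.51.100.7", "packets": "20"},
]

ingress = filter_like(rows, "flow_direction", "ingress")
# Aggregate only what survived the filter
print(sum(int(row["packets"]) for row in ingress))  # 320
```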

An example query and its result:

Query and Result Example

=== START CODE ===

parse @message "* * * * * * * * * * * * * * * * * * * *" as account_id, action, bytes, dstaddr, dstport, end, flow_direction, instance_id, interface_id, log_status, packets, pkt_dst_aws_service, pkt_dstaddr, pkt_src_aws_service, pkt_srcaddr, protocol, srcaddr, srcport, start, traffic_path | stats sum(packets) as packetsTransfered by flow_direction, srcaddr, dstaddr, protocol, action | sort packetsTransfered desc | limit 5

=== END CODE ===
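To see how the steps fit together, here is a minimal Python sketch that runs the same pipeline (parse, stats sum(packets) by, sort desc, limit) over a few made-up records. To keep it short, it assumes a trimmed, hypothetical six-field record format instead of the 20-field format used in the query, and run_query is a hypothetical helper, not an AWS API.

```python
from collections import defaultdict

# Hypothetical trimmed record format (order matters, exactly as with parse)
ALIASES = ["flow_direction", "srcaddr", "dstaddr", "protocol", "action", "packets"]
GROUP_BY = ["flow_direction", "srcaddr", "dstaddr", "protocol", "action"]


def run_query(messages, limit=5):
    totals = defaultdict(int)
    for message in messages:
        row = dict(zip(ALIASES, message.split()))  # parse
        if row.get("packets", "-") == "-":
            continue  # '-' means no value (for example, in NODATA records)
        key = tuple(row[field] for field in GROUP_BY)
        totals[key] += int(row["packets"])  # stats sum(packets) by ...
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)  # sort desc
    return ranked[:limit]  # limit


messages = [
    "ingress 203.0.113.10 10.0.1.45 6 ACCEPT 300",
    "ingress 203.0.113.10 10.0.1.45 6 ACCEPT 200",
    "egress 10.0.1.45 203.0.113.10 6 ACCEPT 150",
    "ingress 198.51.100.7 10.0.1.45 6 REJECT 20",
    "- - - - - -",
]

for group, packets in run_query(messages):
    print(*group, packets)
```

The first row (500 packets) corresponds to the two ingress records from the same source and destination, merged by the grouping step.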

Final Thoughts

VPC Flow Logs is a critical component of any security and compliance strategy for AWS users. It offers a simple yet powerful way to capture and analyze network traffic within a VPC, providing valuable insights into potential security threats and network performance issues. By enabling VPC Flow Logs, users gain more visibility into and control over their AWS environment and can take proactive measures to safeguard against cyber threats.