What Is Cloud Composer?

What Is Cloud Composer?

Google Cloud Composer is a fully managed version of the popular open-source tool, Apache Airflow, a workflow orchestration service. It is easy to get started with, and can be used for authoring, scheduling, monitoring, and troubleshooting distributed workflows. The integration with other Google Cloud services is another useful feature. It is free from vendor lock-in, easy to use, and brings great value for organizations that are willing to orchestrate their batch data workflows.

Cloud Composer environments run on top of a Google Kubernetes Engine (GKE) cluster. The pricing model is customer friendly— you simply pay for what you use.

Google’s Cloud Composer allows you to build, schedule, and monitor workflows—be it automating infrastructure, launching data pipelines on other Google Cloud services as Dataflow, Dataproc, implementing CI/CD and many others. You can schedule workflows to run automatically, or run them manually. Once the workflows are in execution, you can monitor the execution of the tasks in real time. We’ll discuss workflows in greater detail later in this article.

Features of Google Cloud Composer

The main features of Google Cloud Composer include:

- Simplicity: Cloud Composer provides easy access to the Airflow web user interface. With just one click you can create a new Airflow environment.

- Portability: Google Cloud Composer projects are portable to any other platform by adjusting the underlying infrastructure.

- Support for hybrid cloud operations: This feature combines the scalability of the cloud and the security of an on-premise data center.

- Support for Python: Python is a high-level, general-purpose, and interpreted programming language for big data and machine learning. Since Apache Airflow is built using Python, you can easily design, troubleshoot, and launch workflows.

- Seamless Integration: Google Cloud Composer provides support for seamless integration with other Google products, such as BigQuery, Cloud Datastore, Dataflow and Dataproc, AI Platform, Cloud Pub/Sub, and Cloud Storage via well-defined APIs.

- Resilience: Google Cloud Composer is built on top of Google infrastructure and is very fault-tolerant. As an added benefit, its dashboards allow you to view performance data.

Cloud Composer is based on the well-known Apache Airflow open source project, and it can be used to create cloud workflows in Python. In the next section, we’ll discuss the components of Apache Airflow.

Components of Apache Airflow

Apache Airflow is a workflow engine that allows developers to build data pipelines with Python scripts. It can be used to schedule, manage, and track running jobs and data pipelines, as well as recover from failures.

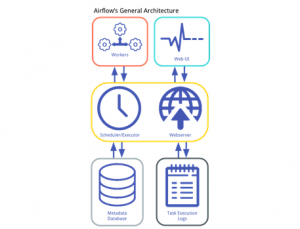

The main components of Apache Airflow are:

- Web server: This is the user interface, i.e., the GUI of Apache Airflow. It is used to track the status of jobs.

- Scheduler: This component is responsible for orchestrating and scheduling jobs.

- Executor: This is a set of worker processes that are responsible for executing the tasks in the workflow.

- Metadata database: This is a database that comprises metadata related to DAGs, jobs, etc.

Apache Airflow Use Cases

Apache Airflow can be used with any data pipelines and is a great tool for orchestrating jobs that have complex dependencies. It has quickly become the defacto standard for workflow automation and is being used in many organizations worldwide including Adobe, Paypal, Twitter, Airbnb, Square, etc.

Some of the popular use cases for Apache Airflow include the following:

- Pipeline scheduler: This is Airflow’s support for notifications on failures, execution timeouts, triggering jobs, and retries. As a pipeline scheduler, Airflow can check the files and directories periodically and then execute bash jobs.

- Orchestrating jobs: Airflow can help to orchestrate jobs even when they have complex dependencies.

- Batch processing data pipelines: Apache Airflow helps you create and orchestrate your batch data pipelines by managing both computational workflows and data processing pipelines.

- Track disease outbreaks: Apache Airflow can also help you to track disease outbreaks.

- Train models: Apache Airflow can help you to manage all tasks in one place, including complex ML training pipelines.

What Problems Does Airflow Solve?

Apache Airflow is a workflow scheduler that easily maintains data pipelines. It solves the shortcomings of cron and can be used to organize, execute, and monitor your complex workflows. Cron has long been in use for scheduling jobs, but Airflow provides many benefits cron can’t offer.

One difference is that Airflow allows you to easily create relationships between tasks, as well as track and monitor workflows using the Airflow UI. These same tasks can present a challenge in cron because it requires external support to manage tasks.

Because it provides excellent support for monitoring, Airflow is the best scheduler for data pipelines. While cron jobs cannot be reproduced, Apache Airflow maintains an audit trail of all tasks that have been executed.

Apache Airflow integrates nicely with the services that are present in the big data ecosystems—including Hadoop, Spark, etc.—and you can easily get started with Airflow as all code is written in Python.

What Makes Apache Airflow the Right Choice for Orchestrating Your Data Pipelines?

Apache Airflow offers a single platform for designing, implementing, monitoring and maintaining your pipelines. This section examines what makes Airflow the right scheduler for orchestrating your data pipelines.

Monitoring

Apache Airflow supports several types of monitoring. Most importantly, it sends out an email if a DAG has failed. You can see the logs as well as the status of the tasks from the Airflow UI.

Lineage

Airflow’s lineage feature helps you track the origins of data and where the data moves over time. This feature provides better visibility while at the same time simplifying the process of tracing errors. It is beneficial when you have several data tasks that are reading and writing into storage.

Sensors

A sensor is a particular type of operator that waits for a trigger based on a predefined condition. Sensors can help a user trigger a task based on a specific pre-condition, but the sensor and the frequency to check for the condition should be specified. In addition, airflow provides support for the customization of operators, which can help you create your operators and sensors if the current ones don’t meet your needs.

Customization

Using Airflow, you can create operators and sensors if the existing ones don’t satisfy your requirements. In addition, airflow integrates nicely with all the services in big data ecosystems such as Hadoop, Spark, etc.

What are Workflows, DAGs, and Tasks?

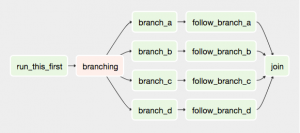

Apache Airflow Directed Acyclic Graphs, also known as DAGs, are workflows created using Python scripts and are a collection of organized tasks to be scheduled and executed. DAGs are composed of several components, such as DAG definition, operators, and operator relationships.

A task in a DAG is a function to be performed, or a defined unit of work. Such functions can include monitoring an API, sending an email, or executing a pipeline.

A task instance is the individual run of a task. It can have state information, such as “running,” “success,” “failed,” “skipped,” etc.

Cloud Composer Architecture

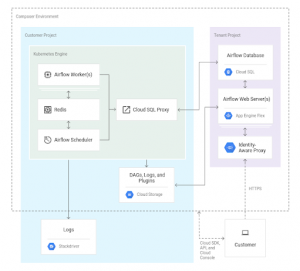

The main components of Cloud Composer architecture include Cloud Storage, Cloud SQL, App Engine, and Airflow DAGs.

Cloud Storage is a bucket that stores the Airflow DAG plugin, data dependencies and relevant logs. This is where you can submit your DAGs and code.

Cloud SQL stores Airflow metadata and is backed up on a daily basis. The app engine hosts the Airflow webserver, and allows you to manage access to it using the Cloud Composer IAM policy. And finally, Airflow DAGs, also known as workflows, are a collection of tasks.

Benefits of Cloud Composer

Here are the benefits of Google Cloud Composer at a glance:

- Simple configuration: If you already have a Google Cloud Account, configuring the Google Cloud Composer is just a couple of clicks away. While the Google Cloud Composer project is being loaded, you can select the Python libraries you want to use and easily configure the environment to your preferences.

- Python integration and support: Google Cloud Composer integrates nicely with Python libraries and supports newer versions of Python, such as Python 3.6.

- Seamless deployment: Google Cloud Composer projects are built using Directed Acyclic Graphs (DAGs) stored inside a dedicated folder as part of your Google Cloud Storage. To deploy, you can create a DAG using the available components in the dashboard. Then, just drag-and-drop the DAG to this folder. That’s all you have to do, the service takes care of everything else.

While a benefit of using a managed service like Google Cloud Composer is that you don’t have to configure the infrastructure yourself, this means you have to pay more for a ready-made solution. Also, Cloud Composer has a relatively limited number of supported services and integrations . You’d need more in-depth knowledge of Google Cloud Platform to be able to troubleshoot DAG connectors.

Getting Started

In this section we’ll learn how to set up the Cloud Composer, orchestrate the pipelines, and trigger Dataflow jobs.

Setting Up Cloud Composer

First, enable the Cloud Composer API and create a new environment by clicking on “Create.” Next, fill in all required details of the environment. These include name and location of the environment, node, machine type, disk size, version of Python, image version, etc.

The setup process takes some time to complete, and completion will be indicated by a green check mark, as shown in Figure 4.

Deployment of a DAG

Cloud Composer stores the DAGs in a Cloud Storage bucket—this enables you to add, edit, and delete a DAG seamlessly. Note that a Cloud Storage bucket will be created when you create an environment.

You can deploy Google Cloud Composer through manual deployment or automatic deployment. If you’re going to deploy DAGs manually, drag and drop the Python files (having a .py extension) to the DAGs folder in Cloud Storage. Alternatively, you can set up a continuous integration pipeline so that the DAG files are deployed automatically.

Orchestrating Google Cloud’s Data Pipelines with Cloud Composer

Cloud Composer can orchestrate existing data pipelines implemented by native Google Cloud services like Data Fusion or DataFlow.

Cloud Data Fusion is a fully managed data integration service from Google. If you have a Data Fusion instance and a deployed pipeline ready, you can trigger it from Cloud Composer using the CloudDataFusionStartPipelineOperator operator.

Cloud Dataflow is a Unified stream and batch data processing that’s serverless, fast, and cost-effective – based on Apache Beam. To trigger Dataflow jobs you can use operators such as DataflowCreateJavaJobOperator or DataflowCreatePythonJobOperator.

Summary

Google Cloud Composer is based on the open-source workflow engine, Apache Airflow, which enables you to build, schedule, and monitor jobs easily. Not only can Cloud Composer help you operate and orchestrate your data pipelines; it integrates nicely with several other Google products via well-defined APIs.

Simplicity, portability, easy deployment, and multi-cloud support are just a few of the benefits Google Cloud Composer offers.