About two months ago we had an opportunity to build a multi-cloud Kubernetes solution for a client that need to run elastic (in quantity, frequency and run time) image processing workloads with TensorFlow.

The first cloud provider they wanted to start with was Azure.

I want to share with you our journey here at CloudZone, as I haven’t found anything yet on the Internet that can provide such a technological solution for this specific need.

First, we can start with the fact that AKS was planned as a managed Kubernetes solution for the job, but we quickly understood that it still lacked three major features to achieve our goal:

- Cluster autoscaler for node pools is still in preview state — we need to be able to scale from zero to X at any time. Only thing that should stop us is Azure account resource limits. In addition to that, AKS VMSS node pool cannot be size zero.

- Low priority virtual machine scale sets (VMSS) — we require to run job type workloads that can benefit from LPVM in terms of cost.

- Multiple node pools to run mixed workloads — we need to run various kinds of pods. Some of them will need very small amount of resources and some of them will need a powerful Nvidia Tesla V100 GPU and a lot of RAM, using “Standard_NC6s_v3” compute instances.

One of the immediate alternatives on the horizon was Spotinst Ocean, but this is a third party product that cannot be paid with Azure credits and the idea was not to pay real money for the compute resources used in Azure.

Another solution, which is kubespray has Azure support, but will not work with pulling configuration, where we need nodes to come up and down all the time.

Another option was a DIY cluster setup with kubeadm, but after some time we found that this solution was too complex and chasing after all Azure API aspects and bootstrap phases (main method was creating node images with Packer that had all dependencies, setup CNI and joining the cluster with cloud-init script upon start) was taking way too much time from the project.

After some trial and error, we came back to a solution that is used by Spotinst Ocean and can provide us all the features we need until AKS will become a mature enough product. It was important for us that this solution is supported by Microsoft too.

We started to use AKS-Engine.

AKS-Engine provided us the features that are still not available in AKS, and more. We can create a highly available control plane, external load balancer, use latest version of Kubernetes and many more enhancements to our Kubernetes infrastructure.

AKS-Engine is using a single Go binary that accepts an API module input JSON file and generate an ARM template (that use many of the methods we used in our DIY cluster attempt and more) then deploy the template to create a fully featured Kubernetes cluster with Azure resources. This behaviour is similar to how KOPS create Kubernetes resources in AWS.

In order to start working with it, it’s required to authenticate against your Azure subscription with Azure CLI, create a Service Principal account and run it against your subscription either with a existing resource group or creating a new one.

Our API module JSON looked like this:

Anatomy of the API module file:

General:

See MS documentation here.

On the first code block we have “apiVersion” and “orchestratorType” that defines the resource we want to create. AKS-Engine is an evolution of ACS-Engine and can work with multiple container orchestration engines.

Kubernetes version is defined in “orchestratorRelease”, otherwise we stuck at aks-engine default, which is 1.12 at this time.

Add-ons:

Next step is to define which Kubernetes add-ons we want to use in our cluster and their parameters. Examples for add-ons can be found here.

Right now, we use two add-ons: Cluster-Autoscaler and Nvidia Daemonset.

- Cluster-autoscaler add-on allows us to use cluster-autoscaler for Azure. AKS-Engine will take care of setting it up in Kubernetes once we include it in the API model input file. We provide min and max instances per cluster (the setup is global as it doesn’t support per VMSS setting this day) then the scan interval, where it will check of nodes need to be added or removed following resource requests from pods.

- Nvidia plugin takes care of device driver and Docker engine runtime settings on each node so we can use GPU resources. Upon node inspection, we should see “nvidia.com/gpu: 1” in addition to CPU, RAM and disk resources. Read more about it here and here.

Control plane profile (master nodes):

- We can install a distributed control plane with aks-engine, that will take care of etcd, availability set and internal/external load balancers for all the master nodes. In our case, a 3 nodes control plane is sufficient enough and its defined in “count”.

- Our DNS prefix will be defined in “dnsPrefix” so a domain under “YOUR_NAME.REGION_NAME.cloudapp.azure.com” will be registered.

- We need to define the size of the compute instances that are going to be used in “vmSize”.

- When we deploy the cluster into a custom VNET, we need to define the resource of the target subnet in “vnetSubnetId”.

- Starting IP address will be defined in “firstConsecutiveStaticIP” to prevent collision with other resources. Each master nodes allocates a lot of private address upon setup.

Data plane profile (worker nodes):

- The node pool we create first is for general purpose workloads that don’t require much resources and a GPU to operate. Next are the GPU enabled node pools that run with Nvidia Tesla V100 compute instances.

- We provide the pool a name that can be used in VMSS creation with “name”.

- Minimum number of instances defined in “count”. Although VMSS support zero instances size, the node pool cannot be created with zero instances in ask-engine yet. Cluster autoscaler will take care of scale to zero later on.

- VMSS, that is created with less than 100 instances, will not scale to more than max 100 until multiple placement groups will be set for it. See more details here. Therefore, we set it to false in “singlePlacementGroup”.

- Just like with master nodes, we define VM size for each pool in “vmSize”.

- Because we are working with VMSS, we need to define the “availabilityProfile”. Each new instance coming up or down will be registered with cluster autoscaler (using azure.json with it’s kubelet) so adding and removing nodes will be in sync as both Kubernetes node objects and VM’s inside the VMSS. When we describe such node, we can observe the following:

ProviderID: azure:///subscriptions/****/resourceGroups/aks-canadacentral/providers/Microsoft.Compute/virtualMachineScaleSets/k8s-gencanada-10448498-vmss/virtualMachines/0

- Managed disk for VMs is defined in “storageProfile”.

- General and Fallback nodes can run on-demand, while the main load should be running with low priority VMs. This is defined in “scaleSetPriority” and removing evicted VMs with “scaleSetEvictionPolicy” so we will not confuse our autoscale. See Kubernetes docs for how node affinity is set to achieve this.

- Node pool labels that we use for node selector in deployment and affinity rules (to prefer run workload on LPVM and then on-demand) are defined in “customNodeLabels”.

- As with master nodes, we need to provide the subnet ID where they will run with “vnetSubnetId” for each node pool.

Linux instances profile:

Because this is not a managed solution, all VMs within the the Kubernetes cluster can be managed with SSH. Every instance in the VMSS can be also accessed with 5000X port with VMSS LB NATN rule.

In order to supply credentials for that, we need to provide an SSH login user with “adminUsername” and a public key in “keyData”. Credentials will be added to VMs with cloud-init, upon creation.

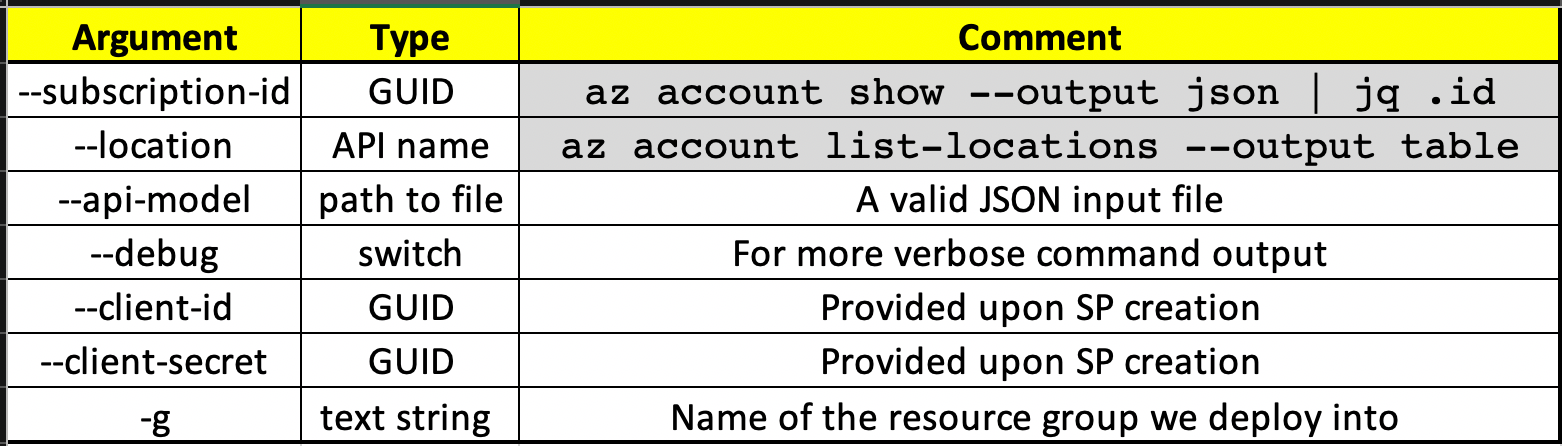

Running the “aks-engine” command:

Command structure:

aks-engine deploy --subscription-id [SUBSCRIBTION_ID] --location [LOCATION] --api-model [INPUT_FILE].json --debug --client-id [SP_USER_GUID] --client-secret [SP_PASSWORD_GUID] -g [RESOURCE_GROUP_NAME]

Kubernetes resources:

Using ACR as a Docker image registry:

Azure provides a managed container image registry (ACR) that can be used by Kubernetes. In order to pull images from it, we need to create another Service Principal account that will allow us to only run pull commands within the resource scope of the registry.

This credential will be used as secret API object for all our pull operations and define in our deployment:

imagePullSecrets:

— name: acr-auth

Fallback to on-demand if LPVM is not available:

Low priority VMs has no SLA or guarantee to be provisioned at all, so we need to make sure that we can fallback to on-demand when LPVM are not available.

We achieve this with node affinity in the deployment API resource (remember that we provide labels for out two node pools). Here is our example:

Running GPU workloads only on nodes with GPU and vice versa:

We use node selection deployment to pin which deployments will run on which node (with the power of labels) pools:

nodeSelector: gpu: “true”nodeSelector: general: “true”

Putting it all together:

- Run aks-engine and create the cluster.

- Use “_output/[YOU_CLUSTER_NAME]/kubeconfig/kubeconfig.[LOCATION].json” to connect to Kubernetes API, export it as $KUBECONFIG inside your shell or merging into your existing “~/.kube/config”:

KUBECONFIG=~/.kube/config:_output/[YOU_CLUSTER_NAME]/kubeconfig/kubeconfig.json

kubectl config view --flatten

3. Apply your API resources with “kubectl” (You can also use helm as tiller is already installed with the cluster).

4. ???

5. Profit!

Feel free to contact me at yevgenytr@cloudzone.io, LinkedIn or here.