Recently, I’ve had the pleasure of working alongside a group of subcontractors in charge of creating the first cloud-based public data portal. We set out to build a solution without knowing at the outset whether it was even feasible. It was a mighty endeavour and, of course, we were under a lot of pressure to complete the work on a very tight schedule.

We didn’t let any of that interfere with our mission, and our mission was clear: make CKAN (a common data portal solution) deployable and securable on GKE (Google Kubernetes Engine), using managed services wherever possible. We had organizational users who would need to access the application securely from their premises. We also had one programmatic user: an automated file uploader using rsync over SSH.

And so we set off. We researched how CKAN has traditionally been deployed and what experiences other implementers were reporting, reviewed its Docker-related documentation, and gathered as much background information as we could to avoid common pitfalls and unnecessary delays. We also found several attempts by such implementers to package CKAN as a Helm Chart, which seemed like a good approach. We ended up not using any of their solutions, but experimenting with them taught us enough to author our own Helm Chart and make it work on a GKE cluster. We even hooked it up to Filestore, Google’s managed NFS service, to support secure batch uploads.

Here’s how we ended up stitching the Helm Chart together. Kudos to everyone who pitched in!

Deployment

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Values.fullNameOverride }}
  labels:
    app: {{ .Values.fullNameOverride }}
spec:
  replicas: {{ .Values.deploy.replicas }}
  selector:
    matchLabels:
      app: {{ .Values.fullNameOverride }}
  template:
    metadata:
      labels:
        app: {{ .Values.fullNameOverride }}
    spec:
      containers:
        - name: {{ .Values.fullNameOverride }}
          image: {{ .Values.deploy.image.registry }}/{{ .Values.fullNameOverride }}:{{ .Values.deploy.image.tag }}
          imagePullPolicy: {{ .Values.deploy.image.pullPolicy }}
          ports:
            # CKAN's web server listens on port 5000 inside the container
            - containerPort: 5000
              protocol: TCP
          resources:
{{ toYaml .Values.deploy.resources | indent 12 }}
          volumeMounts:
            # config.ini comes from the ConfigMap; subPath mounts only that file
            - mountPath: /etc/ckan/config.ini
              name: config-volume
              subPath: config.ini
            # Uploaded files live on the Filestore (NFS) share
            - mountPath: /usr/lib/ckan/venv/src/ckan/upload_files/
              name: ckan-files
      volumes:
        - configMap:
            defaultMode: 420  # octal 0644
            name: {{ .Values.fullNameOverride }}
          name: config-volume
        - name: ckan-files
          nfs:
            path: {{ .Values.nfs.path }}
            server: {{ .Values.nfs.server }}
```
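The Deployment mounts the Filestore share directly through an inline `nfs` volume. A common alternative, sketched here under our own assumptions rather than what we shipped, is to wrap the share in a PersistentVolume and PersistentVolumeClaim so that other pods (for example, one serving the rsync uploader) can claim the same share with `ReadWriteMany` access. The `ckan-files` names and the `1Ti` capacity are hypothetical; the PersistentVolume name is prefixed with the namespace because PersistentVolumes are cluster-scoped and the chart is installed once per environment namespace.

```yaml
# Hypothetical PV/PVC pair exposing the Filestore share to the cluster
apiVersion: v1
kind: PersistentVolume
metadata:
  name: {{ .Release.Namespace }}-ckan-files   # PVs are cluster-scoped, so keep names unique
spec:
  capacity:
    storage: 1Ti                 # assumption: match the Filestore instance size
  accessModes:
    - ReadWriteMany              # NFS lets many pods mount the share read-write
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""           # static binding, no dynamic provisioning
  nfs:
    path: {{ .Values.nfs.path }}
    server: {{ .Values.nfs.server }}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ckan-files               # hypothetical name, namespaced per release
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""
  volumeName: {{ .Release.Namespace }}-ckan-files   # bind explicitly to the PV above
  resources:
    requests:
      storage: 1Ti
```

With this in place, the Deployment’s `ckan-files` volume would reference `persistentVolumeClaim: {claimName: ckan-files}` instead of the inline `nfs` block.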

Service

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: {{ .Values.fullNameOverride }}
  name: {{ .Values.fullNameOverride }}
spec:
  ports:
    - port: 80
      protocol: TCP
      targetPort: {{ .Values.service.port }}
      {{- if eq .Values.service.type "NodePort" }}
      nodePort: {{ .Values.service.NodePort }}
      {{- end }}
  selector:
    app: {{ .Values.fullNameOverride }}
  sessionAffinity: None
  type: {{ .Values.service.type }}
```

Ingress

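```yaml
# Note: the extensions/v1beta1 Ingress API was removed in Kubernetes 1.22;
# newer clusters need networking.k8s.io/v1.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: {{ .Values.fullNameOverride }}
  annotations:
    # Name of a global static IP reserved in GCP beforehand (placeholder)
    kubernetes.io/ingress.global-static-ip-name: "RESERVED_IP_NAME"
spec:
  # Default backend: every path, including "/", goes to the CKAN Service
  # ("path" is not a backend field, so it is not set here)
  backend:
    serviceName: {{ .Values.fullNameOverride }}
    # Must match the Service's "port" (80), not its targetPort
    servicePort: 80
  # loadBalancerSourceRanges is a Service (type: LoadBalancer) field, not an
  # Ingress field, so it is not honored here. The intended restriction was:
  #   loadBalancerSourceRanges:
  #     - RESERVED_IP/32
```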
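On clusters running Kubernetes 1.22 or later, the Ingress above has to be written against `networking.k8s.io/v1`, where the default backend moves under `defaultBackend`. On GKE, the source-IP restriction that `loadBalancerSourceRanges` was meant to express is normally enforced by a Cloud Armor security policy attached through a `BackendConfig`. The sketch below assumes such a policy already exists; `ckan-backendconfig` and `ALLOWLIST_POLICY_NAME` are hypothetical names, and the Service would also need the annotation `cloud.google.com/backend-config: '{"default": "ckan-backendconfig"}'` for the policy to take effect.

```yaml
# networking.k8s.io/v1 form of the same Ingress
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: {{ .Values.fullNameOverride }}
  annotations:
    kubernetes.io/ingress.global-static-ip-name: "RESERVED_IP_NAME"
spec:
  defaultBackend:
    service:
      name: {{ .Values.fullNameOverride }}
      port:
        number: 80               # the Service's port, not its targetPort
---
# Hypothetical BackendConfig attaching a Cloud Armor allowlist policy
apiVersion: cloud.google.com/v1
kind: BackendConfig
metadata:
  name: ckan-backendconfig       # hypothetical name
spec:
  securityPolicy:
    name: ALLOWLIST_POLICY_NAME  # assumption: a pre-created Cloud Armor policy
```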

ConfigMap

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Values.fullNameOverride }}
data:
  # Select the config.ini matching the namespace the release is installed into
  config.ini: |-
{{- if eq .Release.Namespace "env1" }}
{{ .Files.Get "files/env1.ini" | indent 4 }}
{{- end }}
{{- if eq .Release.Namespace "env2" }}
{{ .Files.Get "files/env2.ini" | indent 4 }}
{{- end }}
{{- if eq .Release.Namespace "env3" }}
{{ .Files.Get "files/env3.ini" | indent 4 }}
{{- end }}
{{- if eq .Release.Namespace "env4" }}
{{ .Files.Get "files/env4.ini" | indent 4 }}
{{- end }}
```
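The templates read everything environment-specific from the chart’s values, apart from config.ini, which is picked per namespace from the chart’s `files/` directory. A minimal `values.yaml` covering every key the templates reference might look like the sketch below; every value is a placeholder we made up for illustration, not the project’s actual settings. `service.port` is the container port the Service targets, and GKE’s Ingress controller expects a `NodePort` Service unless container-native load balancing (NEGs) is enabled.

```yaml
# Hypothetical values.yaml; every value below is a placeholder
fullNameOverride: ckan

deploy:
  replicas: 2
  image:
    registry: gcr.io/PROJECT_ID   # placeholder registry path
    tag: "latest"                 # placeholder tag
    pullPolicy: IfNotPresent
  resources:
    requests:
      cpu: 500m
      memory: 1Gi
    limits:
      memory: 2Gi

service:
  type: NodePort
  port: 5000        # Service targetPort: CKAN's container port
  NodePort: 30500   # only used when type is NodePort (default range 30000-32767)

nfs:
  server: 10.0.0.2  # placeholder Filestore instance IP
  path: /ckan_files # placeholder Filestore share name
```

Installing into one of the environment namespaces, for example `helm install ckan ./ckan-chart --namespace env1 -f values.yaml` (the chart path is hypothetical), then renders the ConfigMap from `files/env1.ini`.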

CKAN is a distributed application which employs 4 significant services:

1. Backend web server(s)

2. PostgreSQL database server(s)

3. Solr indexing and search server(s)

4. Frontend load balancer/web server(s)

We decided to keep only the backend server component in an unmanaged container hosted on GKE. For the remaining services we found managed solutions: Cloud SQL for the PostgreSQL database, a Bitnami-based HA Solr deployment with ZooKeeper, and GCP’s HTTP(S) Load Balancer as the frontend.
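Pointing the CKAN container at these services comes down to the `sqlalchemy.url` and `solr_url` settings in config.ini. CKAN 2.x also reads a set of environment variables, among them `CKAN_SQLALCHEMY_URL` and `CKAN_SOLR_URL`, that override those settings, which keeps database credentials out of the ConfigMap. The fragment below is a sketch of that option, not what our chart does; the Secret `ckan-secrets` and its keys are hypothetical, and the fragment would sit under the container entry in the Deployment.

```yaml
# Fragment for the CKAN container spec (containers[0] in the Deployment)
env:
  - name: CKAN_SQLALCHEMY_URL          # overrides sqlalchemy.url
    valueFrom:
      secretKeyRef:
        name: ckan-secrets             # hypothetical Secret
        key: sqlalchemy-url            # e.g. postgresql://USER:PASS@CLOUD_SQL_IP/ckan
  - name: CKAN_SOLR_URL                # overrides solr_url
    valueFrom:
      secretKeyRef:
        name: ckan-secrets
        key: solr-url                  # e.g. http://SOLR_HOST:8983/solr/ckan
```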

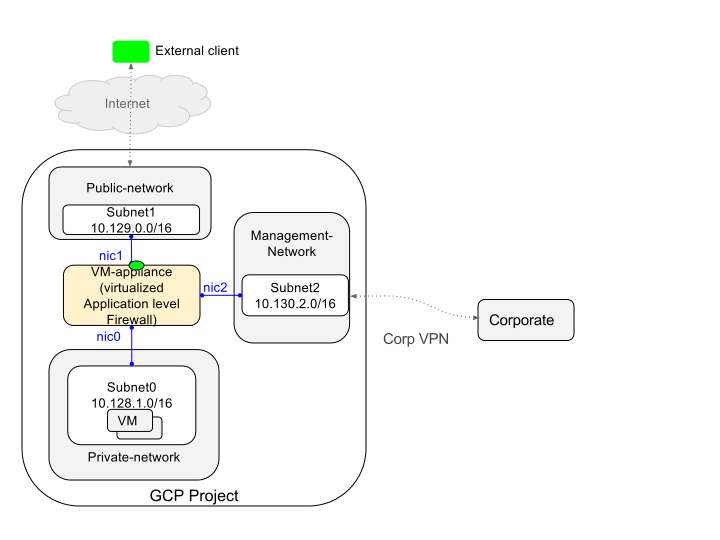

To meet the security requirements set by the client, we had to install a software-based, “next-generation” web application firewall (WAF). The chosen product was Reblaze, which is developed locally, so support during the project was responsive and fluid. The WAF sat at the crux of our architecture and served as the main entry point for all incoming traffic to the application.

We built separate VPCs for Application, Security, and Management. To allow these networks to communicate with one another, we set up VPC peering. The main administrative endpoint was a bastion host we spun up, on which only authorized use was permitted. This machine was where we wrote most of our infrastructure code (using Google’s Cloud Source Repositories) and managed our Kubernetes cluster.

We had to overcome many obstacles before the project was over. There was so much to do and very little time; we had a mission, after all, and a straightforward one. So we stitched together all the underlying services and configured everything to allow the communication paths we had outlined. The last details were still being ironed out the day before we flipped the switch. We had to cut some corners and carry some technical debt past our launch date, but we launched on schedule. Our mission was accomplished.

The project is far from over. We still need to implement the DevOps processes that will let the client’s developers enjoy CI/CD for their new Dockerized CKAN server, and to secure those processes with Aqua Security, which we freshly installed from the Google Cloud Marketplace. It is a wonderful cloud-security manager for Kubernetes that has already proved its worth in tracking and authorizing deployable artifacts, providing endpoint security and container firewalls, and much more.